#include <mlmodel_client.hpp>

| using | interface_type = MLModelService |
| using | service_type = viam::service::mlmodel::v1::MLModelService |
| template<typename T> using | tensor_view = typename make_tensor_view_<T>::type |
| using | signed_integral_base_types |
| using | unsigned_integral_base_types |
| using | integral_base_types |
| using | fp_base_types = boost::mpl::list<float, double> |
| using | base_types = boost::mpl::joint_view<integral_base_types, fp_base_types> |
| using | tensor_view_types |
| using | tensor_views = boost::make_variant_over<tensor_view_types>::type |
| using | named_tensor_views = std::unordered_map<std::string, tensor_views> |
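
The aliases above describe `tensor_views` as a variant over one view type per base element type, and `named_tensor_views` as a map from tensor name to such a variant. The sketch below illustrates that shape with the standard library only: it swaps in `std::variant` for the Boost variant, and `simple_view`, `simple_views`, `named_simple_views`, `total_elements`, and `holds_type` are hypothetical names that are not part of the SDK. It is meant to show how code can handle a tensor without knowing its element type up front, not how the SDK implements it.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <variant>

// Hypothetical stand-in for tensor_view<T>: a non-owning pointer plus a length.
template <typename T>
struct simple_view {
    const T* data;
    std::size_t size;
};

// Stand-in for tensor_views: one alternative per element type (a subset only).
using simple_views = std::variant<simple_view<std::int8_t>,
                                  simple_view<std::int32_t>,
                                  simple_view<float>,
                                  simple_view<double>>;

// Stand-in for named_tensor_views.
using named_simple_views = std::unordered_map<std::string, simple_views>;

// Sum the element counts of all tensors, whatever their element types are.
inline std::size_t total_elements(const named_simple_views& tensors) {
    std::size_t total = 0;
    for (const auto& entry : tensors) {
        total += std::visit([](const auto& view) { return view.size; }, entry.second);
    }
    return total;
}

// True if a tensor with this name exists and holds elements of type T.
template <typename T>
bool holds_type(const named_simple_views& tensors, const std::string& name) {
    const auto it = tensors.find(name);
    return it != tensors.end() && std::holds_alternative<simple_view<T>>(it->second);
}
```

A visitor such as the lambda in `total_elements` runs for whichever alternative is active, which is why the map can mix integer and floating-point tensors under different names.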

| | MLModelServiceClient(std::string name, const ViamChannel& channel) |
| const ViamChannel& | channel() const |
| std::shared_ptr<named_tensor_views> | infer(const named_tensor_views& inputs, const ProtoStruct& extra) override |
| | Runs the model against the input tensors and returns inference results as tensors. |
| struct metadata | metadata(const ProtoStruct& extra) override |
| | Returns metadata describing the inputs and outputs of the model. |
| std::shared_ptr<named_tensor_views> | infer(const named_tensor_views& inputs) |
| | Runs the model against the input tensors and returns inference results as tensors. |
| virtual std::shared_ptr<named_tensor_views> | infer(const named_tensor_views& inputs, const ProtoStruct& extra) = 0 |
| | Runs the model against the input tensors and returns inference results as tensors. |
| struct metadata | metadata() |
| | Returns metadata describing the inputs and outputs of the model. |
| virtual struct metadata | metadata(const ProtoStruct& extra) = 0 |
| | Returns metadata describing the inputs and outputs of the model. |
| API | api() const override |
| | Returns the API associated with a particular resource. |
| std::shared_ptr<named_tensor_views> | infer(const named_tensor_views& inputs) |
| | Runs the model against the input tensors and returns inference results as tensors. |
| struct metadata | metadata() |
| | Returns metadata describing the inputs and outputs of the model. |
| Name | get_resource_name() const override |
| | Returns the Name for a particular resource. |
| | Resource(std::string name) |
| virtual std::string | name() const |
| | Return the resource's name. |
| void | set_log_level(log_level) const |
| | Set the log level for log messages originating from this Resource. |

| template<typename T> static tensor_view<T> | make_tensor_view(const T* data, std::size_t size, typename tensor_view<T>::shape_type shape) |
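
The `make_tensor_view` signature above takes a raw pointer, an element count, and a shape, which suggests a non-owning view over caller-managed memory. The following is a minimal sketch of that idea under stated assumptions: `shaped_view` and `make_shaped_view` are hypothetical names, the shape is a plain `std::vector<std::size_t>` rather than the SDK's `shape_type`, and the size check is a choice made for this sketch, not documented SDK behavior.

```cpp
#include <cstddef>
#include <functional>
#include <numeric>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical shaped, non-owning view; the SDK's tensor_view<T> is a different type.
template <typename T>
struct shaped_view {
    const T* data;
    std::vector<std::size_t> shape;

    // Number of elements implied by the shape (product of all dimensions).
    std::size_t size() const {
        return std::accumulate(shape.begin(), shape.end(), std::size_t{1},
                               std::multiplies<std::size_t>());
    }

    // Row-major element access for a two-dimensional view.
    const T& at(std::size_t row, std::size_t col) const {
        return data[row * shape.at(1) + col];
    }
};

// Build a view over `size` elements at `data`, rejecting a shape that disagrees.
template <typename T>
shaped_view<T> make_shaped_view(const T* data, std::size_t size,
                                std::vector<std::size_t> shape) {
    shaped_view<T> view{data, std::move(shape)};
    if (view.size() != size) {
        throw std::invalid_argument("shape does not match element count");
    }
    return view;
}
```

Because the view does not copy the data, the buffer passed in must outlive every view created over it.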

| | MLModelService(std::string name) |
| | Service(std::string name) |
| Name | get_resource_name(const std::string& type) const |
| LogSource | logger_ |

An MLModelServiceClient provides client-side access to a remotely served ML Model Service. Use this class to communicate with MLModelService instances running elsewhere.

◆ infer() [1/3]

`std::shared_ptr<named_tensor_views> viam::sdk::MLModelService::infer(const named_tensor_views& inputs)` [inline]

Runs the model against the input tensors and returns inference results as tensors.

- Parameters
  - `inputs`: The input tensors on which to run inference.
- Returns
  - The results of the inference as a shared pointer to named_tensor_views. The data viewed by the views is only valid for the lifetime of the returned shared_ptr.
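
The overload set listed here pairs a one-argument `infer` with a virtual two-argument `infer` that takes `extra`. A common C++ arrangement for such a pair is a non-virtual convenience overload that forwards to the virtual one, plus a using-declaration in the derived class so the override does not hide the convenience overload. The sketch below shows that arrangement with hypothetical types (`model_service`, `model_client`, `tensors`, `extra_args`); that the one-argument overload forwards an empty `extra` is an assumption of the sketch, not something this page states.

```cpp
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-ins for ProtoStruct and named_tensor_views.
using extra_args = std::map<std::string, std::string>;
using tensors = std::map<std::string, int>;

class model_service {
   public:
    virtual ~model_service() = default;

    // Convenience overload: forwards with an empty `extra` (assumed behavior).
    std::shared_ptr<tensors> infer(const tensors& inputs) {
        return infer(inputs, extra_args{});
    }

    // The customization point that concrete services implement.
    virtual std::shared_ptr<tensors> infer(const tensors& inputs,
                                           const extra_args& extra) = 0;
};

class model_client : public model_service {
   public:
    // Without this using-declaration, the override below would hide
    // the one-argument overload inherited from model_service.
    using model_service::infer;

    std::shared_ptr<tensors> infer(const tensors& inputs,
                                   const extra_args& extra) override {
        // Echo the inputs and record how many extra arguments arrived.
        auto out = std::make_shared<tensors>(inputs);
        (*out)["extra_count"] = static_cast<int>(extra.size());
        return out;
    }
};
```

With the using-declaration in place, callers holding a `model_client` can call either overload directly.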

◆ infer() [2/3]

`std::shared_ptr<named_tensor_views> viam::sdk::impl::MLModelServiceClient::infer(const named_tensor_views& inputs, const ProtoStruct& extra)` [override, virtual]

Runs the model against the input tensors and returns inference results as tensors.

- Parameters
  - `inputs`: The input tensors on which to run inference.
  - `extra`: Any additional arguments to the method.
- Returns
  - The results of the inference as a shared pointer to named_tensor_views. The data viewed by the views is only valid for the lifetime of the returned shared_ptr.

Implements viam::sdk::MLModelService.
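
The return contract above ties the validity of the viewed data to the lifetime of the returned `shared_ptr`. One standard way to get that property is the aliasing constructor of `std::shared_ptr`, where the returned pointer targets the views but keeps the buffers behind them alive. This is a sketch of the general technique only; whether the SDK uses this mechanism is not stated here, and `float_view`, `named_float_views`, `inference_result`, and `fake_infer` are hypothetical names.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical non-owning view over float data.
struct float_view {
    const float* data;
    std::size_t size;
};
using named_float_views = std::unordered_map<std::string, float_view>;

// Owns the raw buffers together with the views that point into them.
struct inference_result {
    std::vector<std::vector<float>> buffers;
    named_float_views views;
};

// Produce a result whose views stay valid exactly as long as the
// returned shared_ptr (or any copy of it) is alive.
std::shared_ptr<named_float_views> fake_infer() {
    auto owner = std::make_shared<inference_result>();
    owner->buffers.push_back({0.25f, 0.75f});
    const auto& scores = owner->buffers.back();
    owner->views.emplace("scores", float_view{scores.data(), scores.size()});
    // Aliasing constructor: shares ownership of `owner`, points at `owner->views`.
    return std::shared_ptr<named_float_views>(owner, &owner->views);
}
```

A caller that needs the data after releasing the pointer should copy it out of the view first, since the view itself owns nothing.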

◆ infer() [3/3]

`virtual std::shared_ptr<named_tensor_views> viam::sdk::MLModelService::infer(const named_tensor_views& inputs, const ProtoStruct& extra)` [virtual]

Runs the model against the input tensors and returns inference results as tensors.

- Parameters
  - `inputs`: The input tensors on which to run inference.
  - `extra`: Any additional arguments to the method.
- Returns
  - The results of the inference as a shared pointer to named_tensor_views. The data viewed by the views is only valid for the lifetime of the returned shared_ptr.

Implements viam::sdk::MLModelService.

◆ metadata() [1/2]

Returns metadata describing the inputs and outputs of the model.

- Parameters
  - `extra`: Any additional arguments to the method.

Implements viam::sdk::MLModelService.

◆ metadata() [2/2]

Returns metadata describing the inputs and outputs of the model.

- Parameters
  - `extra`: Any additional arguments to the method.

Implements viam::sdk::MLModelService.

The documentation for this class was generated from the following file:

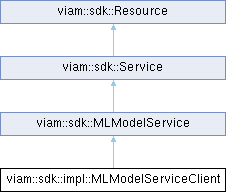

Inheritance diagram for viam::sdk::impl::MLModelServiceClient:
